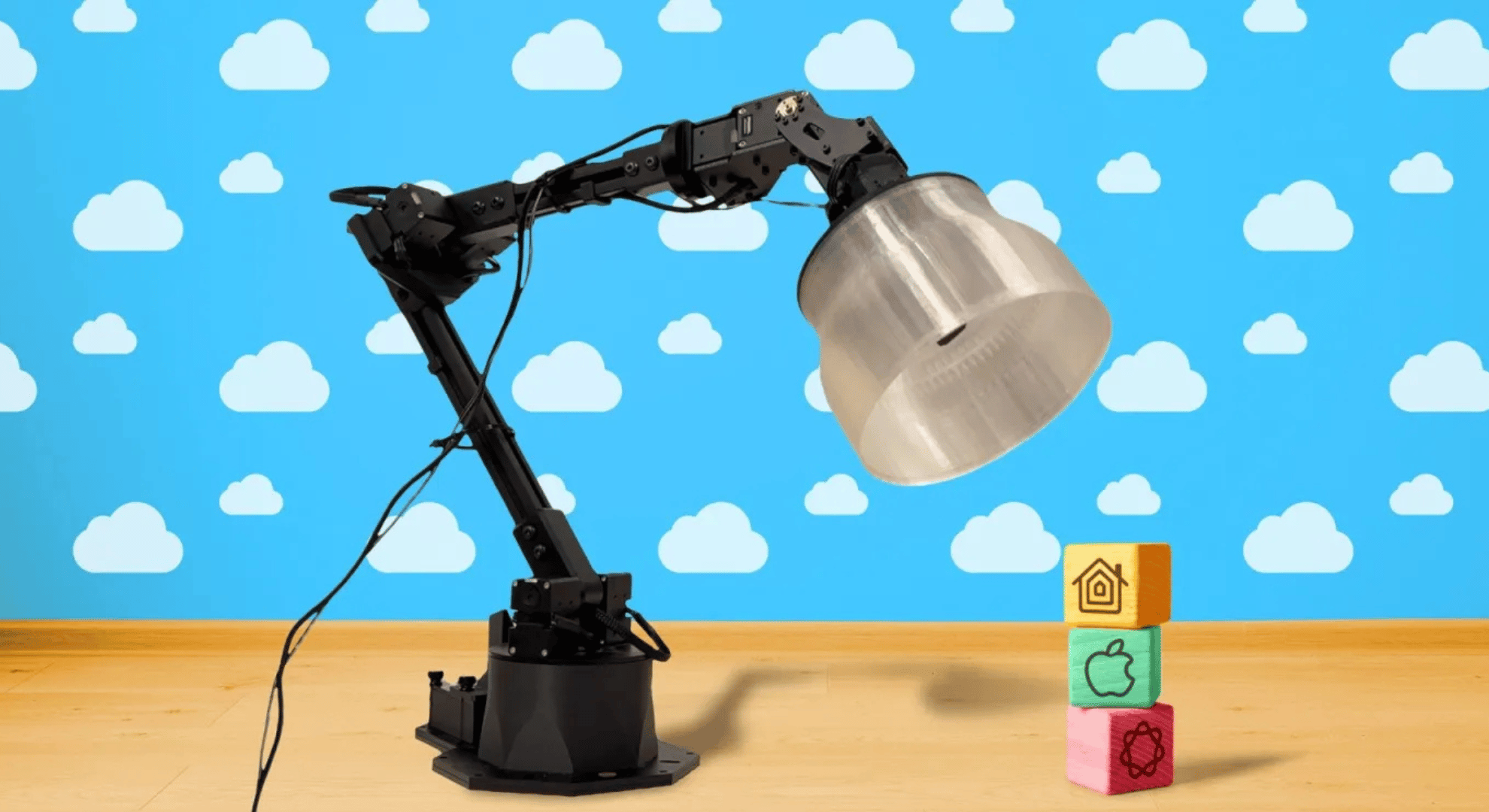

Apple recently published a research paper on consumer robotics that emphasizes the importance of expressive movement in improving human-robot interaction. To illustrate the point, the company built a lifelike lamp robot that pays homage to Pixar’s iconic lamp.

“Like most animals,” states the report, “humans are highly sensitive to motion and subtle changes in movement.”

Apple’s robot mirrors the anatomy of Pixar’s lamp: the lampshade serves as the robot’s head and the arm as its neck. Functionally, it works like a more expressive smart speaker: users ask questions, and the robot answers in Siri’s voice.

“Nonverbal behaviors such as posture, gestures, and gaze are essential for conveying internal states, both consciously and unconsciously, in human interaction. For robots to interact more naturally with humans,” the paper notes, “robot movement design should likewise integrate expressive qualities, such as intention, attention, and emotions, alongside traditional functional considerations like task fulfillment and time efficiency.”

A video released alongside the report shows a split-screen comparison of the expressive robot and a purely functional one. For example, when asked about the weather, the functional robot simply states the forecast, whereas the expressive robot mimics a human gesture, turning its head to look out the window before answering.

Following a research-through-design methodology, the team documented the hardware design process, developed a set of expressive movement primitives, and outlined storyboards for a series of interaction scenarios. Their framework weighs both functional and expressive utilities when generating movements and applies the resulting behavior sequences to different tasks.

The researchers also ran a user study comparing expression-driven and function-driven movements across six scenarios. They found that expression-driven movements led to significantly higher user engagement and more favorable perceptions of the robot’s qualities.