Videos produced by artificial intelligence are flooding the internet. As the technology improves, it’s getting harder to tell what is real and what is fake. Software engineers warn the public about AI-generated deepfakes. Yet AI may be the only defense against itself.

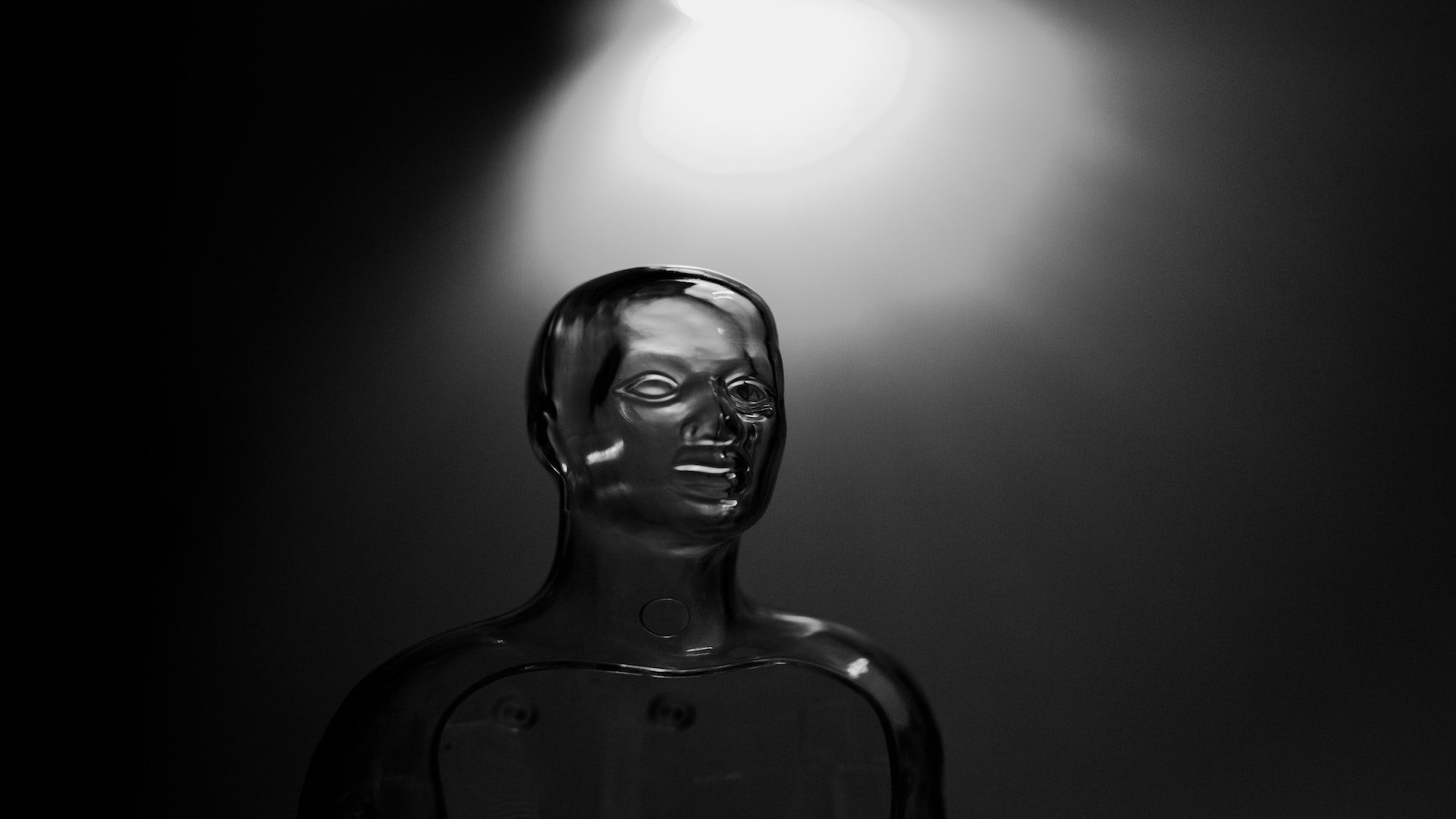

Deepfakes

Deepfakes are photos or videos created by AI and designed to fool us. They raise concerns about generative AI’s ability to create content that intentionally misleads the public. According to new research from Drexel University, machine learning could be the key to catching the fakes. The university’s new algorithm spots the markers of AI-generated videos with over 98% accuracy, outperforming the other detection tools it was tested against. Of course, there is blatant irony in using AI to protect us against AI-generated content.

Matthew Stamm, an associate professor in Drexel University’s College of Engineering, said, “It’s more than a bit unnerving that this video technology could be released before there is a good system for detecting fakes created by bad actors.”

“Responsible companies will do their best to embed identifiers and watermarks, but once the technology is publicly available, people who want to use it for deception will find a way,” Stamm said. “That’s why we’re working to stay ahead of them by developing the technology to identify synthetic videos from patterns and traits that are endemic to the media.”

Detecting Deepfakes

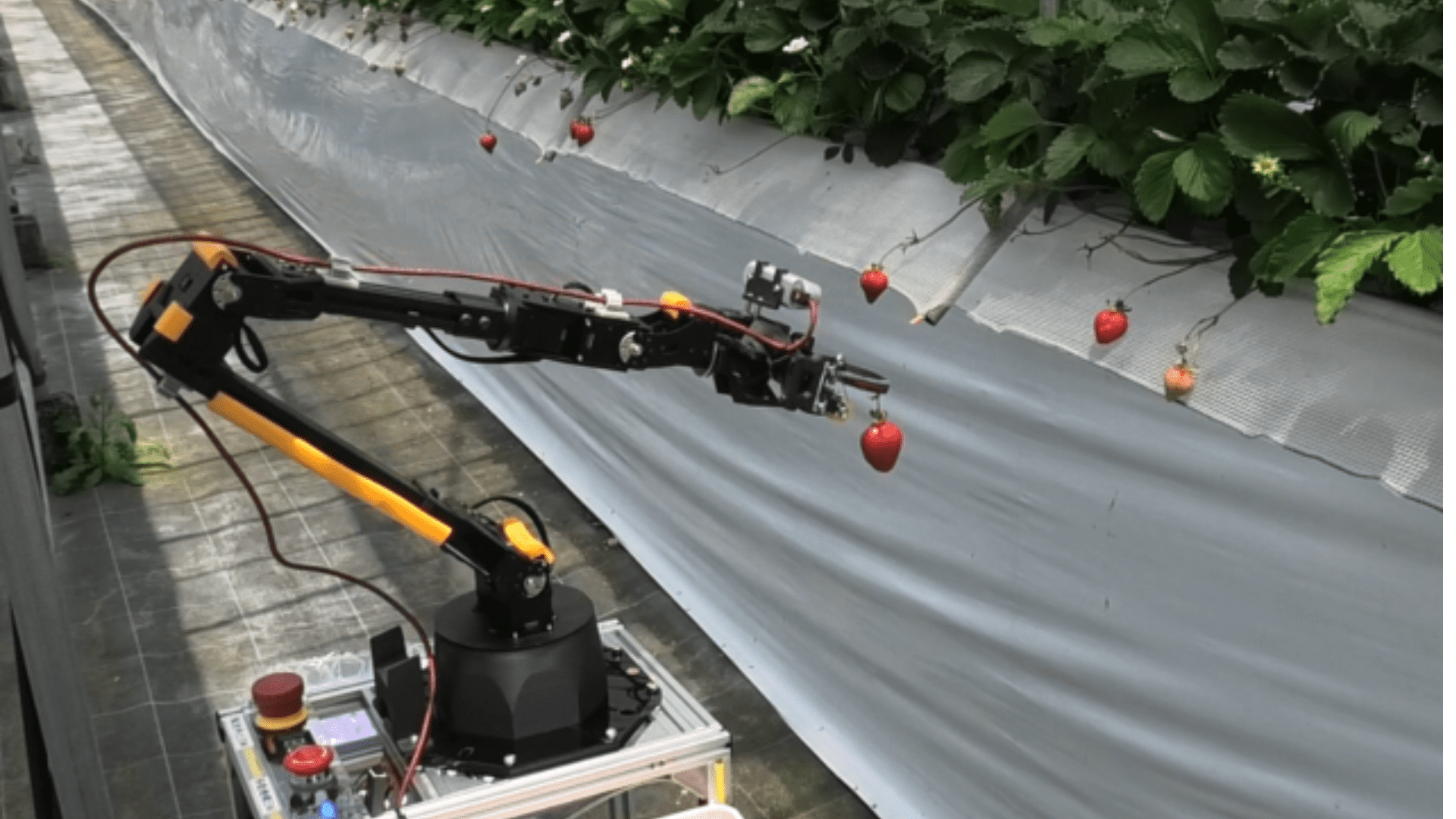

“Until now, forensic detection programs have been effective against edited videos by simply treating them as a series of images and applying the same detection process,” Stamm said. “But with AI-generated video, there is no evidence of image manipulation frame-to-frame, so for a detection program to be effective it will need to be able to identify new traces left behind by the way generative AI programs construct their videos.”

The Drexel breakthrough is an algorithm that detects fake images and video content. The new tool, called “MISLnet,” grew out of years of work on tools that spot manipulated media, such as pixels added or moved between frames, a clip’s speed being altered, or frames being removed.

Those tools work because a digital camera’s algorithmic processing creates characteristic relationships between pixel color values. Those relationships look very different in images that users have edited, for example with Photoshop. But because AI-generated videos are not captured by a camera, they don’t contain the telltale disparities between pixel values that these tools look for.
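To make the idea of “relationships between pixel values” more concrete, here is a minimal sketch assuming a grayscale image stored as a list of rows. It uses a hypothetical fixed predictor (each pixel estimated as the mean of its four direct neighbors) and reports the prediction error, or residual. Forensic tools study residuals of this general kind, but real camera models and Drexel’s tools are far more sophisticated; this predictor is an illustrative assumption, not MISLnet’s method.

```python
from typing import List

Image = List[List[float]]


def prediction_residual(img: Image) -> Image:
    """Return pixel minus the mean of its four direct neighbors.

    Illustrative fixed predictor only (an assumption, not MISLnet).
    Only interior pixels are scored, so the output is (H-2) x (W-2).
    """
    h, w = len(img), len(img[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3")
    out: Image = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            # Estimate the pixel from its up, down, left, and right neighbors.
            predicted = (img[y - 1][x] + img[y + 1][x]
                         + img[y][x - 1] + img[y][x + 1]) / 4.0
            row.append(img[y][x] - predicted)
        out.append(row)
    return out


# A smooth gradient is perfectly predicted, so its residual is all zeros.
ramp = [[float(x + 2 * y) for x in range(5)] for y in range(5)]
clean = prediction_residual(ramp)

# "Editing" one pixel breaks the local relationship and shows up in the residual.
edited = [row[:] for row in ramp]
edited[2][2] += 10.0
tampered = prediction_residual(edited)
```

In this toy case the edited pixel stands out with a residual of 10, and its neighbors pick up a smaller negative residual, while the untouched gradient stays at zero.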

Drexel’s MISLnet uses a constrained neural network, which learns to distinguish normal from unusual values at the sub-pixel level of images or video clips. With 98.3% accuracy, the algorithm outperformed the seven other detection systems evaluated in the study.
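The article does not describe MISLnet’s internals. As a hedged illustration only, the sketch below shows one well-known way to “constrain” a network’s first layer for forensics, assuming MISLnet’s constraint resembles the constrained convolutional filters published in this research area: the center weight is fixed at -1 and the remaining weights are rescaled to sum to 1, so the filter outputs “neighbors’ prediction minus the actual pixel” rather than image content. The function names `constrain_kernel` and `filter2d_valid` are hypothetical.

```python
from typing import List

Kernel = List[List[float]]
Image = List[List[float]]


def constrain_kernel(kernel: Kernel) -> Kernel:
    """Project a square, odd-sized kernel onto a prediction-error constraint
    (assumed form): center weight = -1, off-center weights sum to 1."""
    k = len(kernel)
    if k % 2 == 0 or any(len(row) != k for row in kernel):
        raise ValueError("kernel must be square with odd size")
    c = k // 2
    off_center_sum = sum(kernel[i][j] for i in range(k) for j in range(k)
                         if (i, j) != (c, c))
    if off_center_sum == 0:
        raise ValueError("off-center weights sum to zero; cannot normalize")
    # Rescale every weight, then overwrite the center with -1.
    out = [[kernel[i][j] / off_center_sum for j in range(k)] for i in range(k)]
    out[c][c] = -1.0
    return out


def filter2d_valid(img: Image, kernel: Kernel) -> Image:
    """Cross-correlate img with kernel, keeping only positions where it fits."""
    k = len(kernel)
    h, w = len(img), len(img[0])
    return [
        [sum(kernel[i][j] * img[y + i][x + j] for i in range(k) for j in range(k))
         for x in range(w - k + 1)]
        for y in range(h - k + 1)
    ]


# Hypothetical "learned" weights before the constraint is applied.
raw = [[1.0, 2.0, 1.0], [2.0, 5.0, 2.0], [1.0, 2.0, 1.0]]
ck = constrain_kernel(raw)

# A flat image carries no prediction error, so the constrained filter outputs ~0.
flat = [[7.0] * 5 for _ in range(5)]
response = filter2d_valid(flat, ck)
```

Because the weights sum to zero, the filter suppresses smooth scene content and passes only local prediction errors. In designs of this kind the projection is typically reapplied after each training update, so the layer keeps learning which pixel relationships matter while never reverting to an ordinary content filter.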

“We’ve already seen AI-generated video being used to create misinformation,” Stamm said. “As these programs become more ubiquitous and easier to use, we can reasonably expect to be inundated with synthetic videos.”