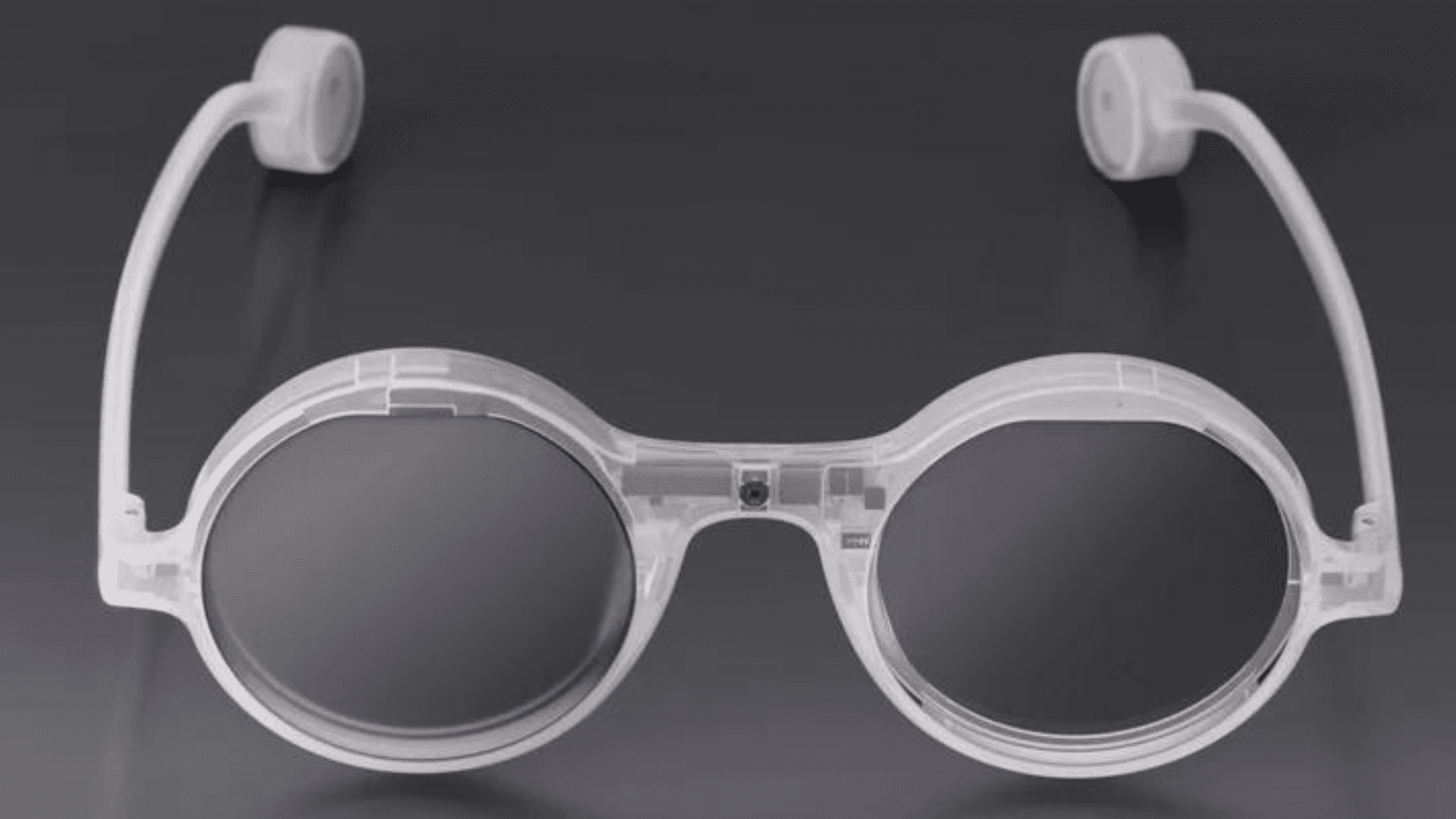

Meta announced new AI capabilities and smartphone features that are coming to its Ray-Ban Meta Smart Glasses later this year.

The new features include real-time AI video processing, live language translation, QR code scanning, reminders, and integrations with apps such as iHeartRadio, Amazon Music, and Audible.

The AI video processing feature will allow users to ask their Ray-Ban Meta glasses questions about what they’re seeing, and Meta AI will answer aloud in real time. The current version can only take a picture and then describe it or answer questions about it, whereas the new version responds to a live view, which should make the experience more natural.

A demo showed users asking their Ray-Ban Meta glasses questions about a meal they were cooking or a city scene in front of them. The real-time video capabilities allow Meta’s AI to process live action and respond audibly.

Additionally, English-speaking users can converse with someone speaking Italian, French, or Spanish, and their Ray-Ban Meta glasses will translate what the other person is saying into the language of their choice. The feature is designed to make it easier to communicate across language barriers, and more languages will be available later.

The company is also adding some phone capabilities, such as alerts and reminders, which become more advanced when used in concert with the AI video processing feature. For example, in a demo, a user asked their Ray-Ban Meta glasses to remember a jacket they were looking at and share the image with a friend later on.

Integrations with iHeartRadio, Amazon Music, and Audible will make it easier to listen to music, audiobooks, or podcasts on the glasses’ built-in speakers. Finally, users can simply ask their glasses to scan something, and the Ray-Ban Meta glasses will automatically scan a QR code and open it on the user’s phone.

The smart glasses will also be available with a range of new Transitions lenses, which darken in response to ultraviolet light. Though we’ve previously seen demonstrations of real-time AI video capabilities from Google and OpenAI, Meta would be the first to launch the features in a consumer product.