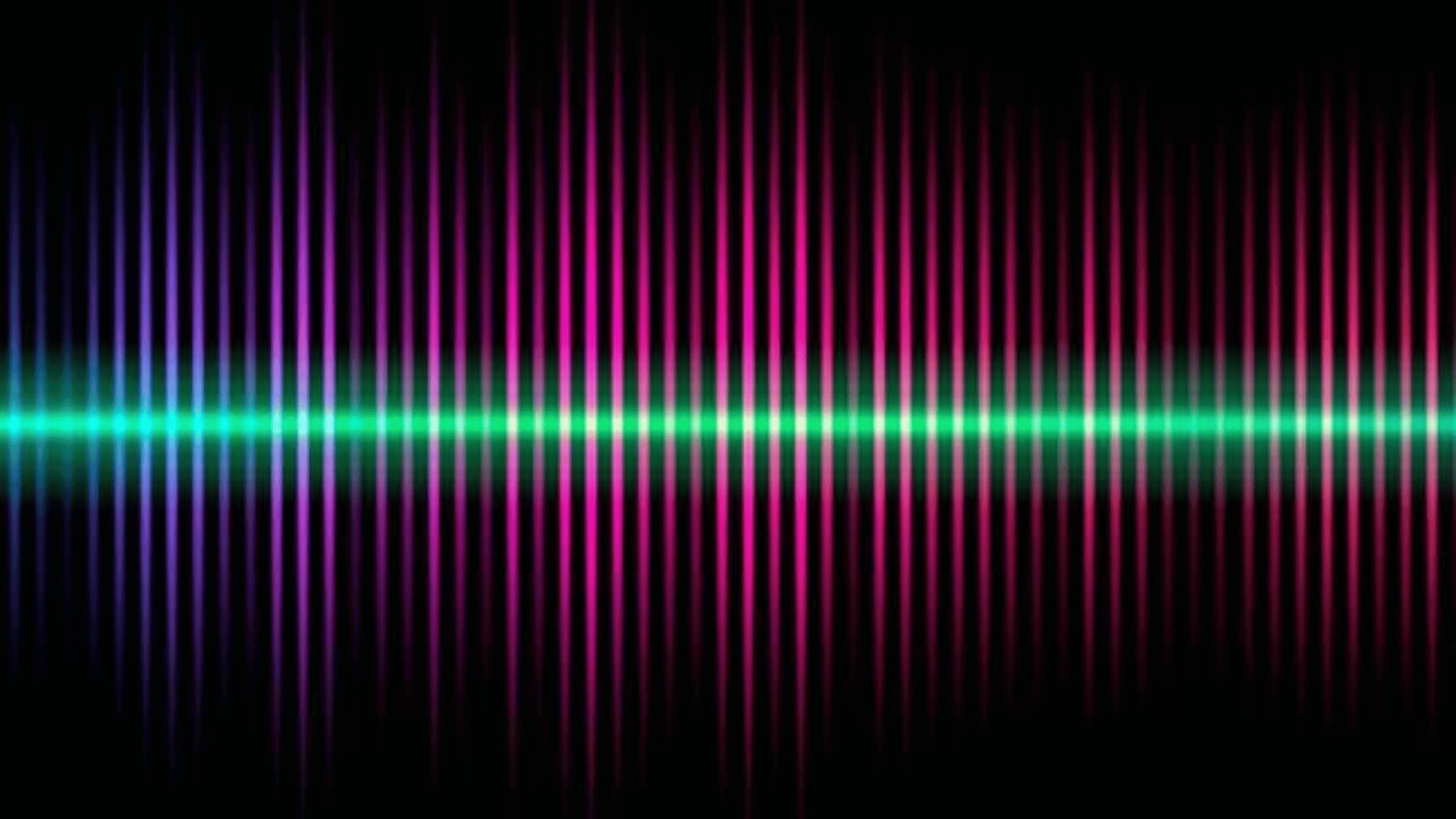

Scientists have recently created headphones that use AI to focus on one external sound source and block out all other noises.

The new technology, called “Target Speech Hearing,” uses artificial intelligence to let the user face a speaker nearby and lock onto their voice. This allows the wearer to single out a specific audio source and retain the signal even if the speaker moves or turns away.

The device comprises a small computer that can be embedded in a pair of commercial headphones. It uses signals from the headphones’ built-in microphone to select and identify a specific speaker’s voice.

Through the technology and the accompanying paper, published in the Proceedings of the CHI Conference on Human Factors in Computing Systems, the researchers hope to help hearing-impaired people. They are also working toward embedding the system into commercial earbuds and hearing aids.

“We tend to think of AI now as web-based chatbots that answer questions,” said study lead author Shyam Gollakota, professor of Computer Science & Engineering at the University of Washington. “In this project, we develop AI to modify the auditory perception of anyone wearing headphones, given their preferences. With our devices you can now hear a single speaker clearly even if you are in a noisy environment with lots of other people talking.”

This work follows the same team’s earlier research into “semantic hearing,” which involved creating an AI-powered smartphone app that would let the wearer choose which sounds to hear from a list of preset sound “classes” and cancel out everything else. For example, the app would allow headphones to single out the sound of sirens or birds and block out other noises.

To use the technology, the wearer simply faces the speaker whose voice they want to target and taps a small button on the headphones. When the speaker’s voice reaches the microphone, the AI software “enrolls” the audio source and registers its vocal patterns.

Once the software recognizes the correct voice and locks onto it, the wearer will be able to hear it regardless of which direction they are facing. Because the system identifies a voice’s unique patterns better over time, its ability to focus on that voice improves the more it hears the speaker talk.