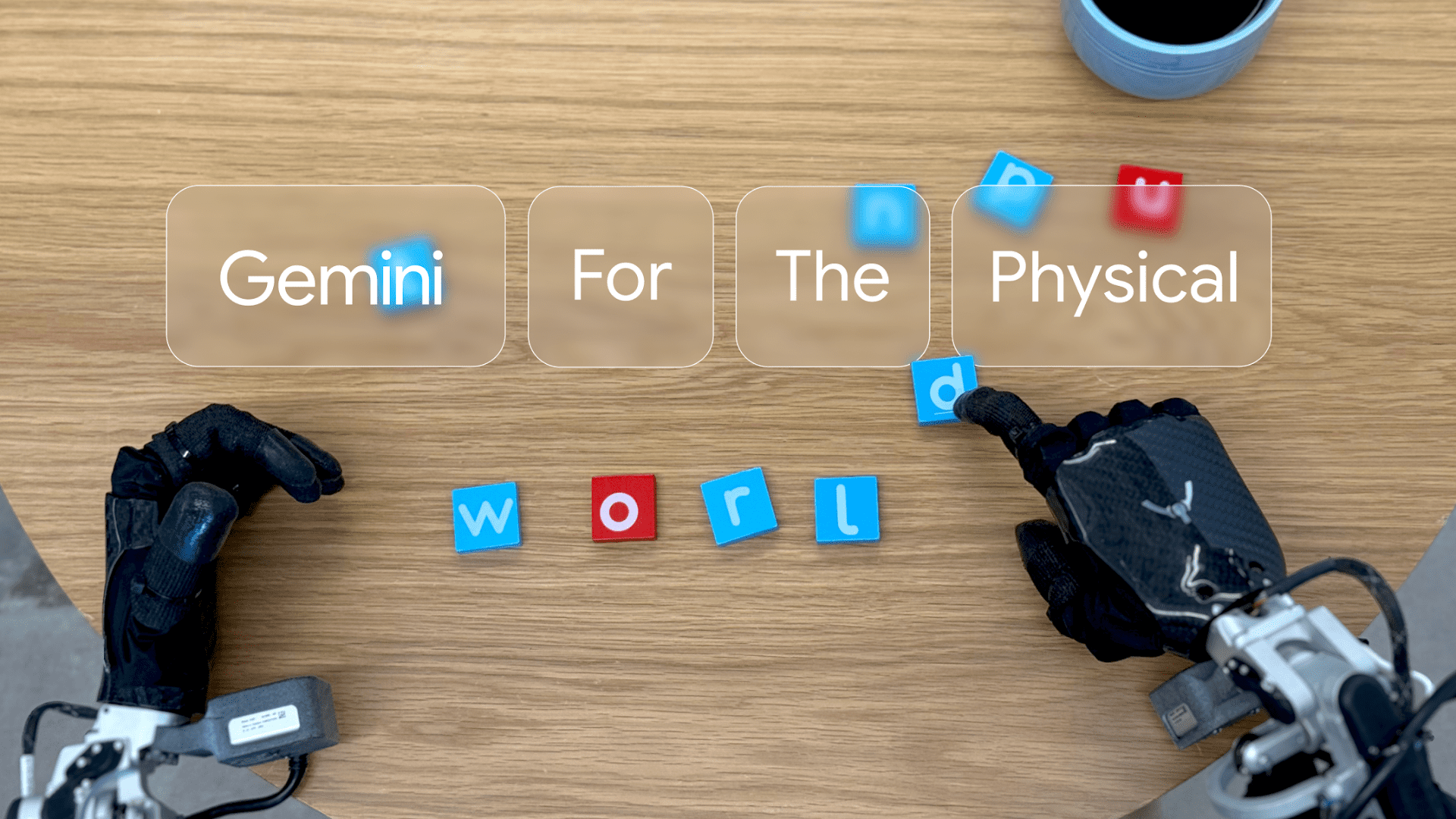

Google DeepMind, Google’s AI research lab, is expanding its Gemini models to solve complex problems across text, images, audio, and video. The tech giant announced two new AI models, based on Gemini 2.0, that lay the foundation for next-generation robots.

Google Gemini Robotics

The two new models are Gemini Robotics and Gemini Robotics-ER.

Gemini Robotics is an advanced vision-language-action (VLA) model built on Gemini 2.0 with additional physical actions “as a new output modality for the purpose of directly controlling robots.” Gemini Robotics-ER, meanwhile, is a model with advanced spatial understanding, “enabling roboticists to run their own programs using Gemini’s embodied reasoning (ER) abilities.”

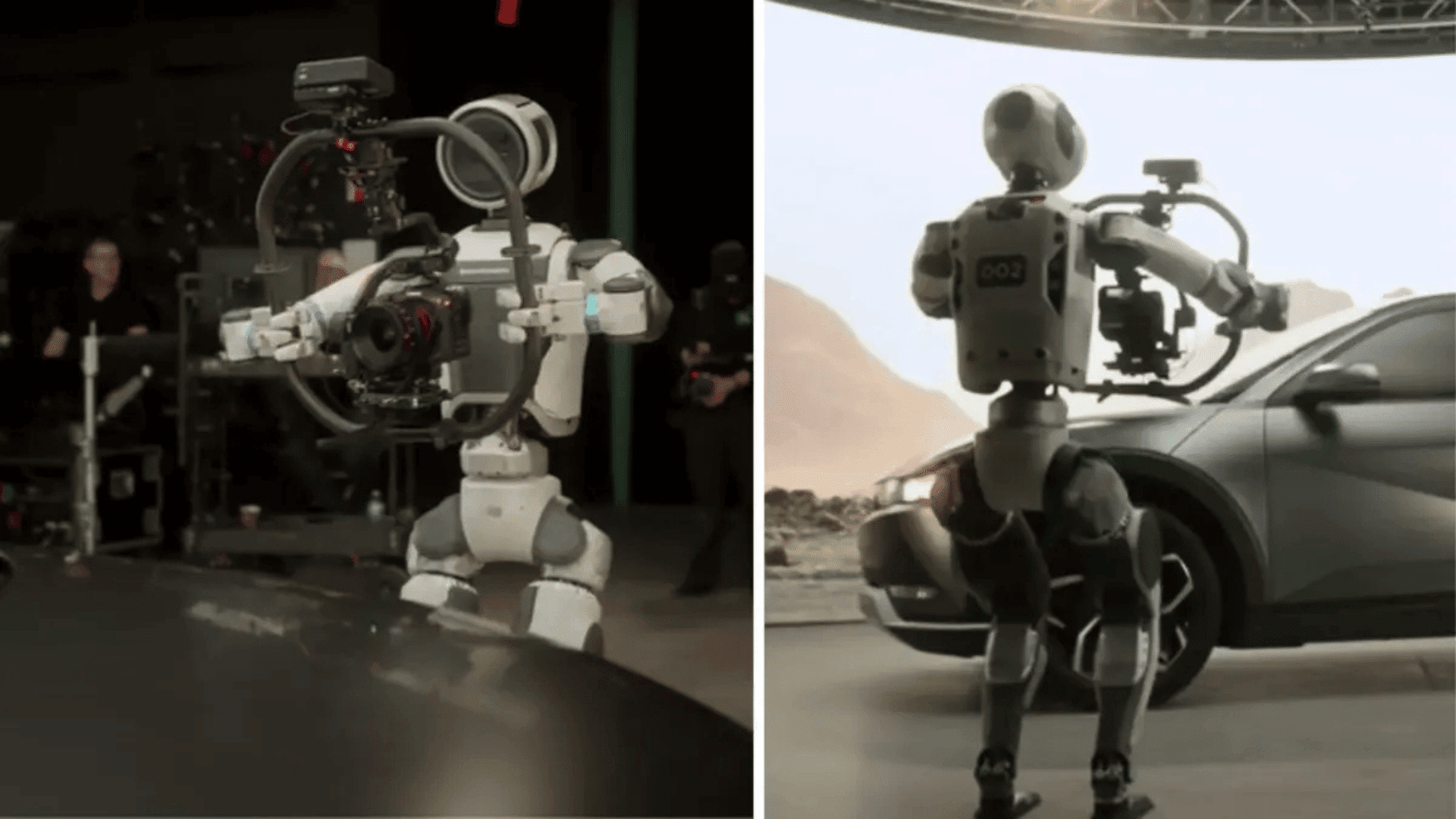

According to Google’s press release, the models enable robots to perform a wider range of “real-world tasks.” In addition, the company is partnering with robotics developer Apptronik to create a new generation of humanoid robots using Gemini 2.0.

AI models for robots need three principal qualities to be effective: generality, interactivity, and dexterity.

Generality

According to Google, the new models take a significant step forward in these areas. Being general means that the robots must adapt to different situations. This includes solving a wide variety of tasks never seen before in training.

Google says, “In our tech report, we show that on average, Gemini Robotics more than doubles performance on a comprehensive generalization benchmark compared to other state-of-the-art vision-language-action models.”

Interactivity

To operate in the real world, AI-powered robots must interact seamlessly with people and their surroundings. They must also adapt to changes on the fly. “Because it’s built on a foundation of Gemini 2.0, Gemini Robotics is intuitively interactive,” Google says.

Gemini’s advanced language understanding capabilities allow it to respond to everyday commands and phrases in different languages. “It can understand and respond to a much broader set of natural language instructions than our previous models, adapting its behavior to your input.”

Dexterity

Dexterity is the final pillar of a helpful AI robot. Fine motor skills are a requirement for everyday tasks but are still too difficult for robots. “By contrast,” Google says, “Gemini Robotics can tackle extremely complex, multi-step tasks that require precise manipulation, such as origami folding or packing a snack into a Ziploc bag.”