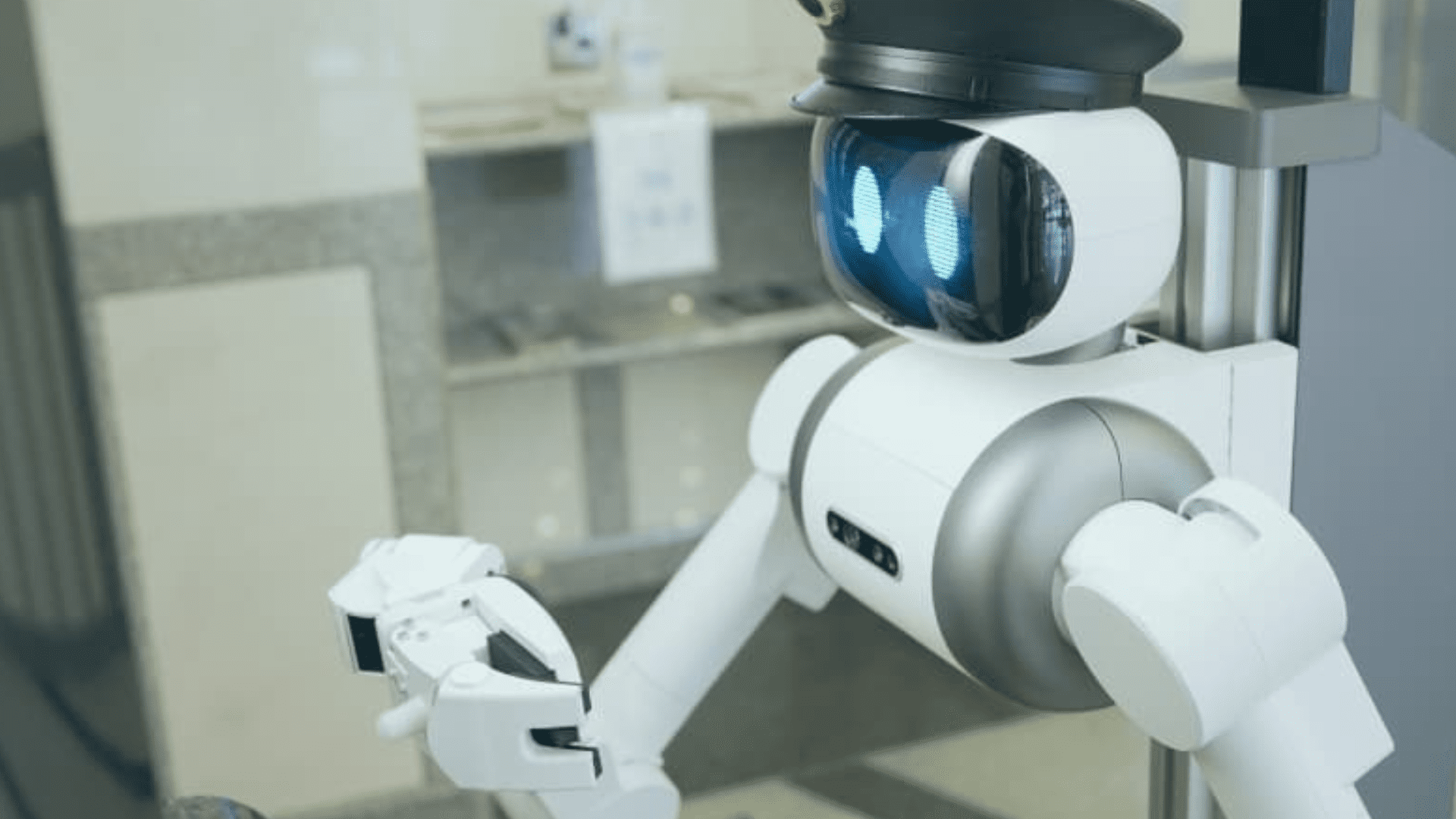

A U.S. biotech company and a Japan-based robotics company have joined forces to integrate an AI “nose” into a humanoid robot, developing what they call the world’s first robot with a “functional sense of smell.”

AI RoboNose Smells Trouble

The U.S. biotech company Ainos installed its AI Nose olfaction module on a humanoid robot developed by Ugo, Inc. Adding a “sense of smell” gives the robot capabilities that vision and hearing alone cannot provide. Ainos’ AI Nose system uses a “high-precision” gas sensor array to pick up volatile organic compounds (VOCs), or scents. An AI system then digitizes the sensor readings and classifies them into Smell IDs, which are used to recognize odors and environmental conditions in real time. According to Ainos, the process mirrors a human’s ability to smell.
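Ainos has not published how Smell IDs are computed, so the following is only a rough sketch of the general idea: a sensor-array reading is compared against stored reference signatures, and the closest match becomes the label. Every name and number here (`REFERENCE_SIGNATURES`, `identify_smell`, the four-channel readings, the distance threshold) is a hypothetical chosen for illustration, and simple nearest-signature matching stands in for whatever model the company actually uses.

```python
import math

# Hypothetical reference signatures: one normalized response per sensor channel.
# These values are invented for illustration and are not Ainos data.
REFERENCE_SIGNATURES = {
    "ethanol": [0.90, 0.20, 0.10, 0.30],
    "ammonia": [0.10, 0.80, 0.30, 0.20],
    "clean_air": [0.05, 0.05, 0.05, 0.05],
}


def identify_smell(reading, max_distance=0.2):
    """Return the label of the closest reference signature, or "unknown".

    `reading` is a list of sensor-channel values with the same length as the
    reference signatures. `max_distance` is an assumed cutoff: readings that
    are farther than this from every signature are not labeled.
    """
    best_label = "unknown"
    best_distance = max_distance
    for label, signature in REFERENCE_SIGNATURES.items():
        distance = math.dist(reading, signature)  # Euclidean distance
        if distance <= best_distance:
            best_label = label
            best_distance = distance
    return best_label


if __name__ == "__main__":
    print(identify_smell([0.85, 0.25, 0.10, 0.30]))
    print(identify_smell([0.50, 0.50, 0.50, 0.50]))
```

In this toy version, a reading close to a stored signature gets that signature's label, and anything too far from all of them is reported as unknown instead of being forced into the nearest category.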

“This marks a turning point for AI-powered sensing,” said Chun-Hsien (Eddy) Tsai, the President and CEO of Ainos. “With smell added to the sensory stack, robots can now understand their environments in ways previously reserved for living beings.”

He continued, “In my opinion, it’s a game-changer for healthcare, industry, and everyday life.”

ugo’s CEO, Ken Matsui, added, “Olfaction is a key missing piece in robot perception. By integrating Ainos’ AI Nose, we’re giving our robots the missing sense – one that will transform how they navigate and interact with real-world spaces.”

The AI Nose is meant to go beyond what the companies call “novelty” smells. In smart manufacturing, it can detect gas leaks, chemical anomalies, and process deviations in real time. Both companies envision the robot contributing to workplace and facility safety, for example by identifying hazardous materials before humans do.

They also see the smelling robot being deployed in healthcare and elderly care facilities, where it could be used to monitor hygiene, infections, or early indications of disease. Consumer applications in smart homes and personal wellness are possible as well.

What’s Next

Following the installation of the AI Nose, the two companies are entering a critical development phase. During this time, they will develop a user interface and control system, and engineers will fine-tune the sensory parameters and response logic. This phase is projected to last about two to four weeks.

After this phase, the system will go through real-world tests in active environments, including commercial and public spaces. The tests will include real-time odor detection and safety alert demonstrations.