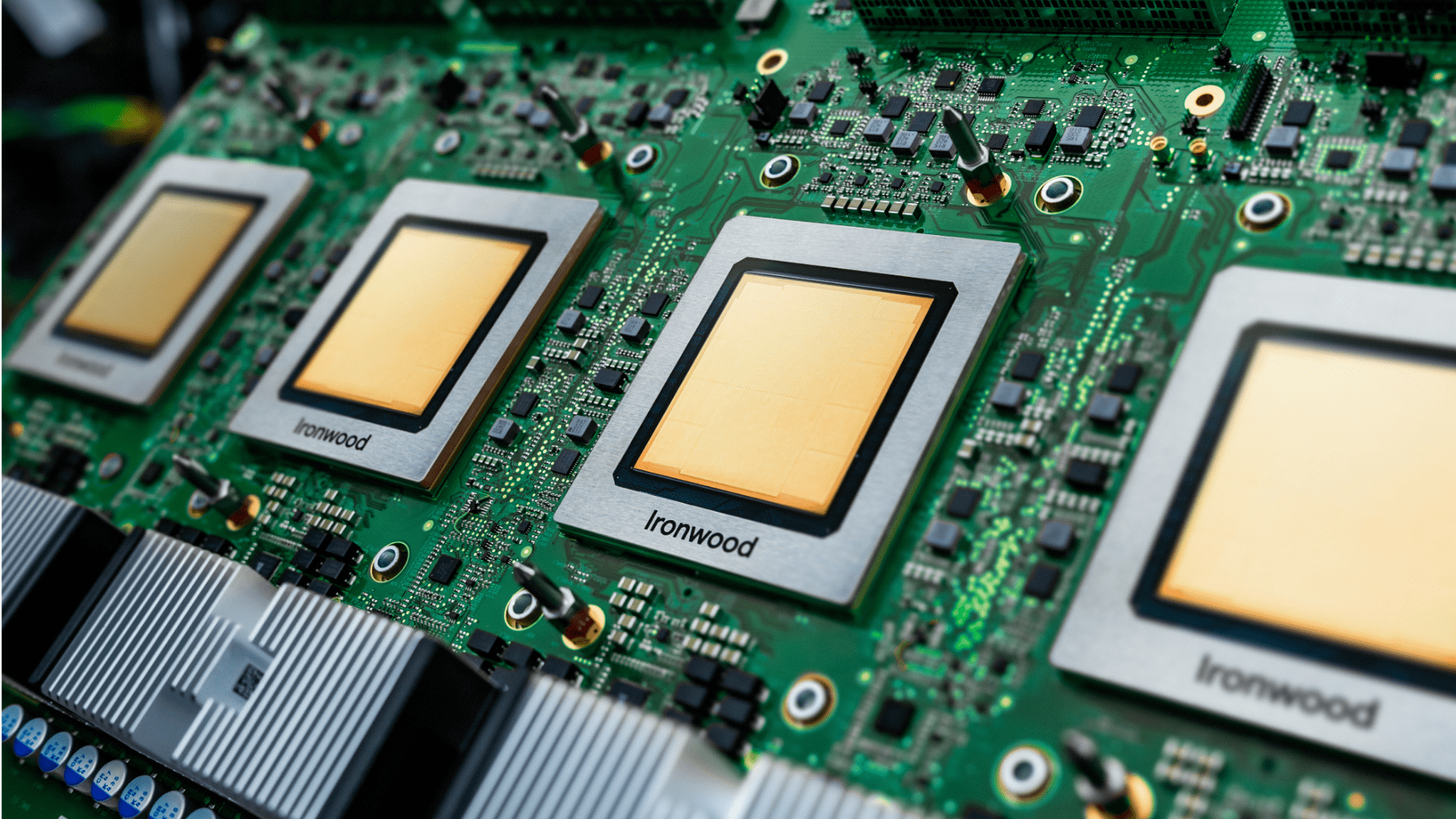

Google unveiled the latest generation of its TPU AI accelerator chip during its Cloud Next conference. The company describes the new chip, Ironwood, as its first TPU designed specifically for inference and for running what it calls “thinking models.”

Ironwood AI Chip

Ironwood is Google’s seventh-generation TPU, and the company claims it’s the “most powerful, capable, and energy-efficient TPU yet.” The AI accelerator chip is reportedly designed to support the next phase of generative AI. Google says that Ironwood is its most “performant and scalable custom AI accelerator to date.”

According to Google, Ironwood represents a significant shift in the development of AI and the infrastructure that powers it: a move from “responsive” AI models, which provide data for a user to interpret, to models that provide “proactive” generation of insights. The company calls this “the age of inference.” Rather than only providing data, Google says, AI models will retrieve and generate data to deliver insights and answers.

According to Google, Ironwood is one of several new components of the Google Cloud AI Hypercomputer architecture.

Each Ironwood chip can reportedly deliver 4,614 TFLOPs of computing power at peak. Each chip has 192GB of dedicated RAM with bandwidth approaching 7.4 TB/s. In addition, Google claims that, when scaled to a full pod of 9,216 chips, Ironwood supports more than 24x the computing power of the world’s largest supercomputer, El Capitan.
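The 24x comparison is a pod-level figure, not a per-chip one. As a rough check on the arithmetic, the sketch below multiplies the quoted per-chip peak by a pod size of 9,216 chips. The pod size comes from Google's announcement and should be treated as an assumption here; the constant and function names are illustrative only.

```python
# Peak per-chip compute quoted in the article, in TFLOPs.
PEAK_TFLOPS_PER_CHIP = 4_614

# Assumption: largest pod configuration, per Google's announcement.
CHIPS_PER_POD = 9_216

# Multiplier Google claims over El Capitan.
CLAIMED_MULTIPLE = 24


def pod_peak_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Aggregate peak compute in exaFLOPs (1 exaFLOP = 1,000,000 TFLOPs)."""
    return chips * tflops_per_chip / 1_000_000


pod = pod_peak_exaflops(CHIPS_PER_POD, PEAK_TFLOPS_PER_CHIP)
print(f"Pod peak: {pod:.1f} exaFLOPs")

# "More than 24x" implies the comparison baseline is below this value.
implied_baseline = pod / CLAIMED_MULTIPLE
print(f"Implied baseline: under {implied_baseline:.2f} exaFLOPs")
```

These are vendor-stated peak numbers, so the result is an upper bound on aggregate throughput rather than a measured benchmark.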

Ironwood also features an enhanced SparseCore, a specialized accelerator for processing ultra-large embeddings common in advanced ranking and recommendation workloads.

In a statement, Google said, “These breakthroughs, coupled with a nearly 2x improvement in power efficiency, mean that our most demanding customers can take on training and serving workloads with the highest performance and lowest latency, all while meeting the exponential rise in computing demand.”

The Ironwood chip is scheduled to launch later this year for Google Cloud customers.