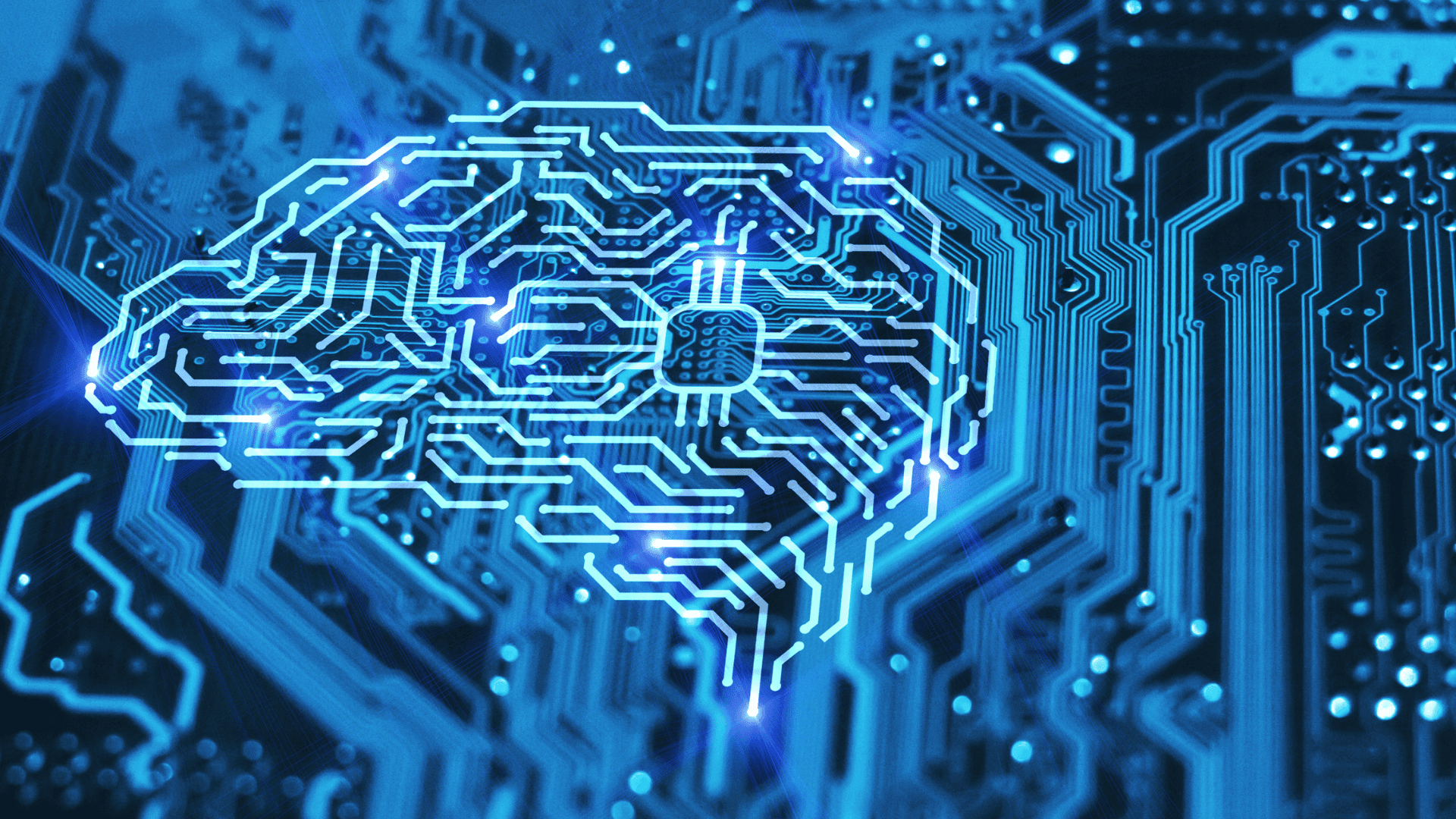

The Arc Prize Foundation, a nonprofit co-founded by prominent AI researcher François Chollet, recently announced that it created a challenging test called ARC-AGI-2 to measure the general intelligence of leading AI models. The test has reportedly stumped most AI models.

ARC-AGI Tests

According to the Arc Prize leaderboard, “reasoning” AI models like OpenAI’s o1-pro and DeepSeek’s R1 score between 1% and 1.3% on the new test. Powerful non-reasoning models, such as GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.0 Flash, score around 1%.

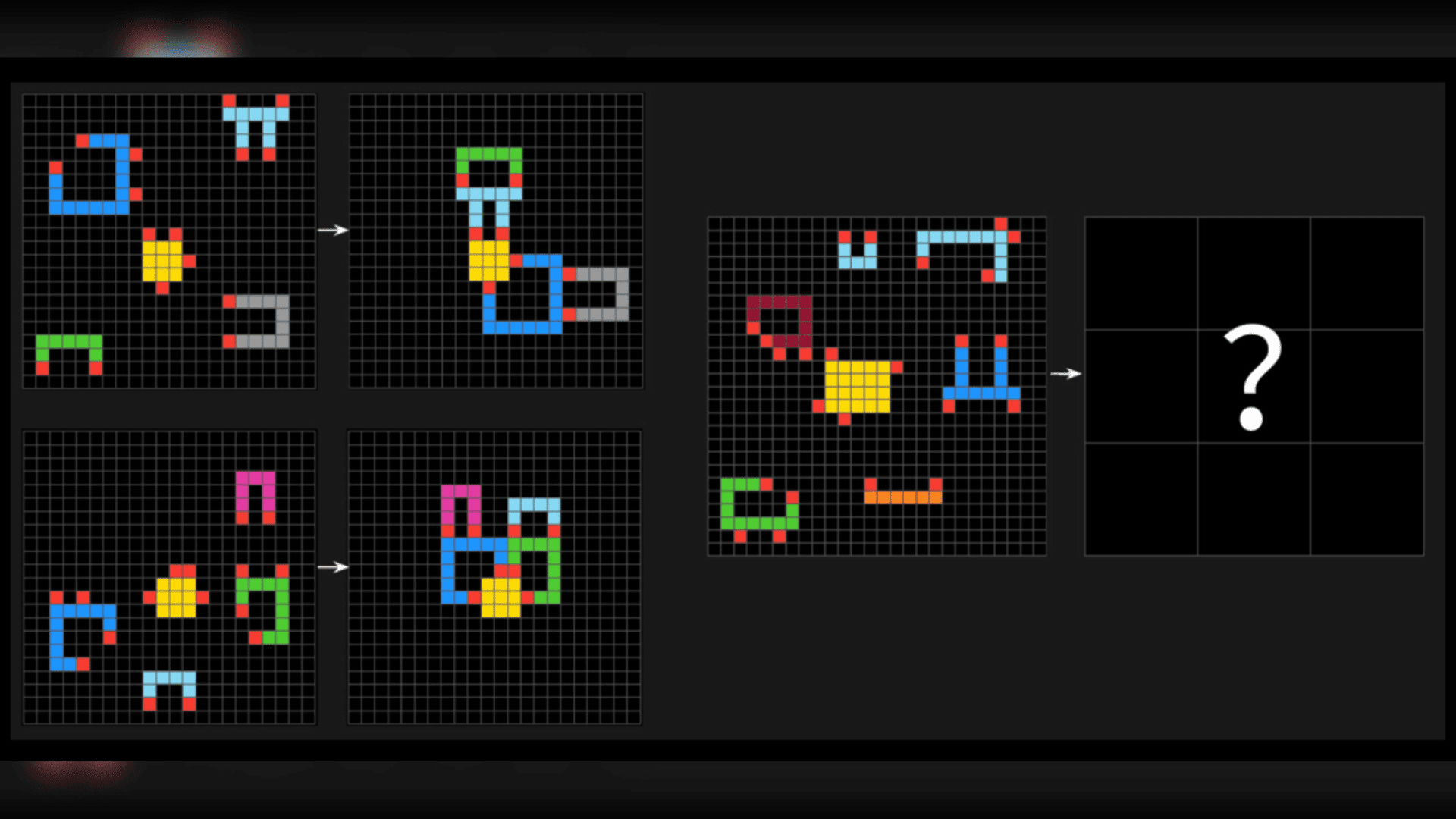

The ARC-AGI tests involve puzzle-like problems in which an AI model is tasked with identifying visual patterns from a series of different-colored squares and generating the correct “answer” grid. The problems are meant to test the AI’s intelligence by forcing it to adapt to problems it has not seen before. The goal is to establish whether an AI system can acquire new skills beyond the data it was trained on.
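To make the puzzle format concrete, here is a minimal Python sketch of a toy task in the same spirit. It assumes grids are lists of integers where each integer stands for a color, and that an answer counts only if the whole grid matches. The task data, the four candidate rules, and the function names are all hypothetical illustrations; real ARC-AGI-2 puzzles are far harder and cannot be solved by checking a short list of fixed rules.

```python
from typing import Callable, Optional

Grid = list[list[int]]  # each integer stands for one cell color (assumption)

def identity(g: Grid) -> Grid:
    return [row[:] for row in g]

def flip_horizontal(g: Grid) -> Grid:
    return [row[::-1] for row in g]

def flip_vertical(g: Grid) -> Grid:
    return [row[:] for row in g[::-1]]

def transpose(g: Grid) -> Grid:
    return [list(col) for col in zip(*g)]

# Hypothetical, deliberately tiny hypothesis space.
CANDIDATE_RULES: dict[str, Callable[[Grid], Grid]] = {
    "identity": identity,
    "flip_horizontal": flip_horizontal,
    "flip_vertical": flip_vertical,
    "transpose": transpose,
}

def infer_rule(train_pairs: list[tuple[Grid, Grid]]) -> Optional[str]:
    """Return the first candidate rule that explains every example pair."""
    for name, rule in CANDIDATE_RULES.items():
        if all(rule(inp) == out for inp, out in train_pairs):
            return name
    return None

# Toy task: a few input/output demonstrations plus one held-out test grid.
toy_task = {
    "train": [
        ([[1, 0, 0], [2, 0, 0]], [[0, 0, 1], [0, 0, 2]]),
        ([[3, 3, 0], [0, 4, 0]], [[0, 3, 3], [0, 4, 0]]),
    ],
    "test_input": [[5, 0, 6], [0, 7, 0]],
    "test_output": [[6, 0, 5], [0, 7, 0]],
}

rule_name = infer_rule(toy_task["train"])
prediction = CANDIDATE_RULES[rule_name](toy_task["test_input"])
solved = prediction == toy_task["test_output"]  # exact match, no partial credit
print(rule_name, solved)
```

The solver is never told the rule. It has to work the rule out from the demonstrations and then apply it to a grid it has not seen, which is the kind of adaptation the test is built to probe.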

To establish a human baseline for the test, the Arc Prize Foundation asked over 400 people to take ARC-AGI-2. The human test subjects scored an average of 60% correct, far exceeding the AI models.

In a post on X, Chollet stated that ARC-AGI-2 is a better measurement of an AI model’s intelligence than the first iteration of the test (ARC-AGI-1) because the new test prevents AI models from relying on extensive computing power to solve problems.

ARC-AGI-1 was undefeated for approximately five years after its creation until OpenAI released its advanced reasoning model, o3. This AI model managed to outperform all other AI models and match human performance on the test.

Though the OpenAI model scored an impressive 75.7% on ARC-AGI-1, it scored only 4% on ARC-AGI-2 while using $200 of computing power per task. Along with the new test, the Arc Prize Foundation announced a new Arc Prize 2025 contest, which challenges developers to reach 85% accuracy on the ARC-AGI-2 test while spending only $0.42 per task.

“Intelligence is not solely defined by the ability to solve problems or achieve high scores,” Arc Prize Foundation co-founder Greg Kamradt wrote in a blog post. “The efficiency with which those capabilities are acquired and deployed is a crucial, defining component. The core question being asked is not just, ‘Can AI acquire [the] skill to solve a task?’ but also, ‘At what efficiency or cost?’”
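The two-part bar described above, accuracy plus cost, can be expressed as a short Python check. This is only a sketch: it assumes the contest figures act as a minimum accuracy and a maximum cost per task, and the `Result` class and function names are hypothetical. The o3 figures are the ones reported in this article.

```python
from dataclasses import dataclass

# Arc Prize 2025 goals as reported in the article.
TARGET_ACCURACY = 0.85       # 85% of tasks solved
TARGET_COST_PER_TASK = 0.42  # US dollars per task

@dataclass
class Result:
    name: str
    accuracy: float       # fraction of tasks solved, between 0.0 and 1.0
    cost_per_task: float  # US dollars of compute per task

def meets_target(result: Result) -> bool:
    """True only if both the accuracy and the cost goals are met."""
    return (
        result.accuracy >= TARGET_ACCURACY
        and result.cost_per_task <= TARGET_COST_PER_TASK
    )

def cost_overrun(result: Result) -> float:
    """How many times the per-task cost goal is exceeded."""
    return result.cost_per_task / TARGET_COST_PER_TASK

# o3 on ARC-AGI-2, using the article's figures: 4% at $200 per task.
o3 = Result(name="o3", accuracy=0.04, cost_per_task=200.0)

print(meets_target(o3))         # False
print(round(cost_overrun(o3)))  # 476
```

On these figures, o3 misses the accuracy goal by a wide margin and spends roughly 476 times the contest's per-task budget.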