Nuclear energy has a long history of innovation, from Marie Curie’s discovery of radium and its radioactive properties in 1898 to Enrico Fermi’s first self-sustaining nuclear chain reaction in 1942. However, nuclear power, the use of heat from atomic fission to generate electricity, did not come to fruition until 1951 at what is today Idaho National Laboratory (INL), the birthplace of nuclear power.

When Was Nuclear Power Invented: EBR-I and the 1950s

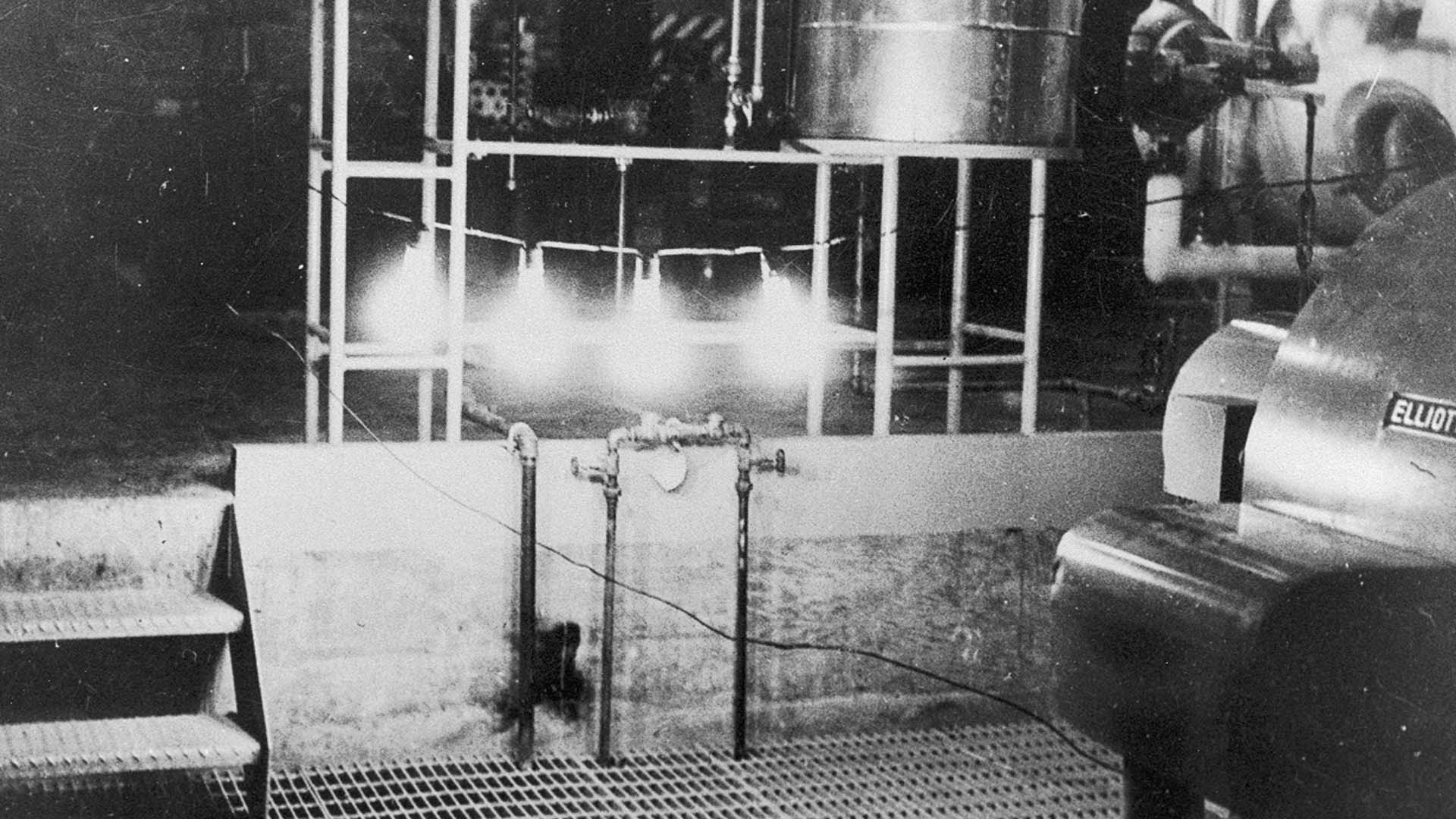

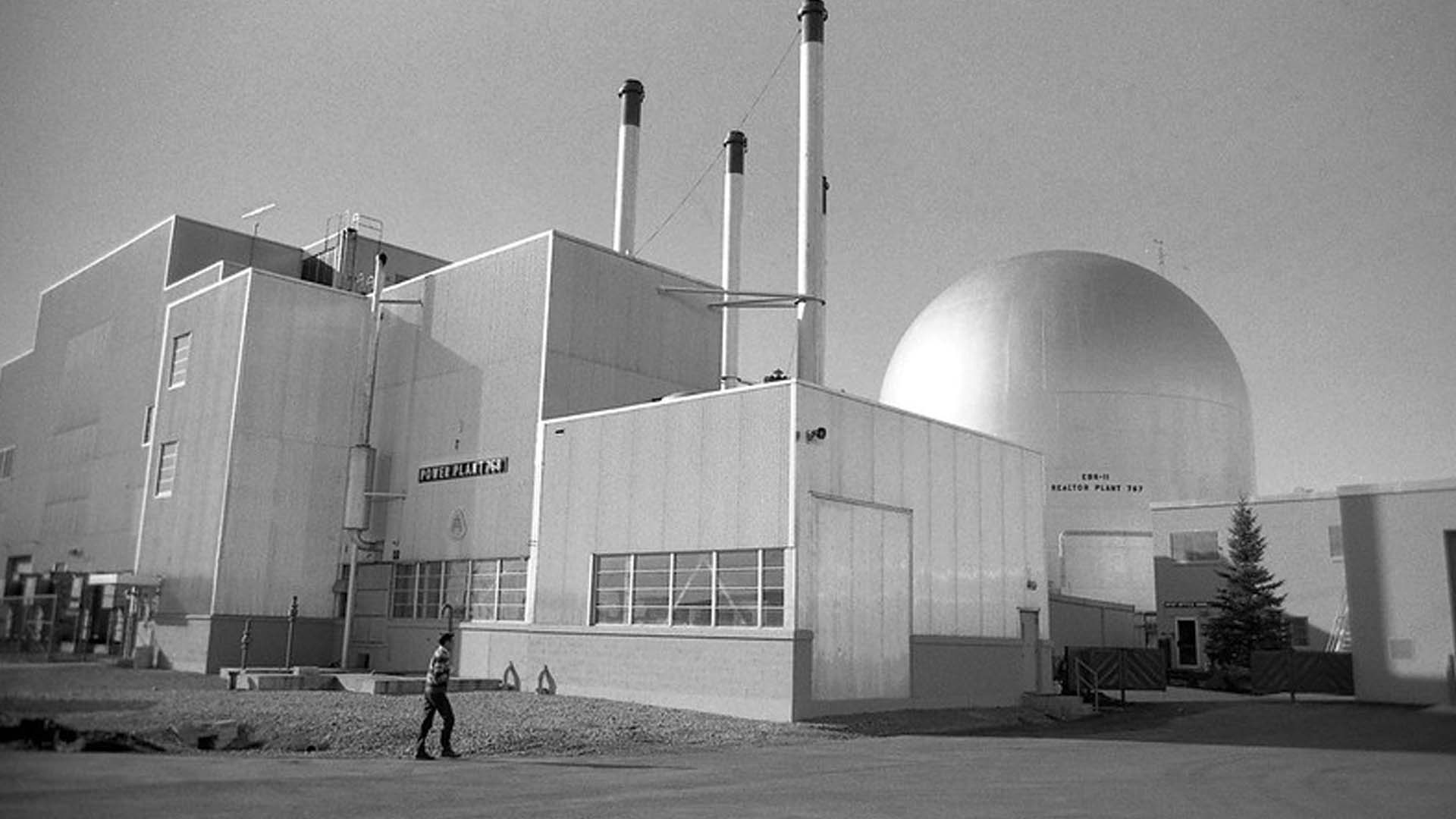

In 1949, the National Reactor Testing Station was established in Idaho. Argonne National Laboratory engineers began construction on the Experimental Breeder Reactor No. 1 (EBR-I) with the goal of researching, testing, and understanding how nuclear energy could bring clean power to the country. On December 20, 1951, EBR-I successfully lit four 200-watt light bulbs using nuclear fuel.

Photo Credit: INL

This marked the first time in history that a usable amount of electricity was generated from nuclear fission. EBR-I’s success generated confidence in reactor research programs, demonstrated the benefits of materials testing, and paved the way for the future use of nuclear power.

For example, on July 17, 1955, four years after EBR-I first operated, a nuclear power plant demonstration operated by Argonne National Laboratory called BORAX III generated enough electricity to power the entire nearby town of Arco, Idaho.

Meanwhile, the former Soviet Union opened the world’s first civilian nuclear power plant in June 1954 in Obninsk. This nuclear-powered electricity generator was called AM-1, or Atom Mirny, which translates to "peaceful atom." AM-1 produced electricity until 1959. In the United States, the first commercial nuclear power plant opened in Shippingport, Pennsylvania, in 1957.

Nuclear Power Progresses: 1960s to the 1980s

Throughout the 1960s and 1970s, utility companies saw nuclear energy as economical, environmentally clean, and safe, and many nuclear reactors were built to generate electricity. In 1974, France even decided to make nuclear energy its main source of power, ending up with 75% of its electricity coming from nuclear reactors. During this time, the U.S. built 104 reactors and received about 20% of its electricity from them.

However, eventual labor shortages and construction delays increased the cost of nuclear reactors and slowed their growth. This coincided with two major nuclear reactor accidents that further decreased nuclear power production: Three Mile Island in 1979 and Chernobyl in 1986.

Chernobyl occurred because of an unsafe reactor design and irresponsible operating practices that have never been allowed outside the former Soviet Union. Three Mile Island occurred when a reactor’s coolant valve opened and did not reclose when it was supposed to. Because the valve’s instrumentation wrongly indicated that it was closed, coolant water was not distributed properly, and the reactor core partially melted down before coolant water was restored. The Pennsylvania Department of Health followed the health of 30,000 people who lived within 5 miles of Three Mile Island, and the study was discontinued after 18 years when it showed no evidence of unusual health effects.

These two incidents further slowed the research and deployment of nuclear reactors because of negative public perception of nuclear power and tighter regulations that increased reactor costs. Public support for nuclear energy fell from an all-time high of 69% in 1977 to 46% in 1979 as a result of Three Mile Island. However, the incidents taught engineers a great deal about reactor safety, and both reactors and plants became better regulated, designed, and operated. The nuclear industry also established the Institute of Nuclear Power Operations in 1979 to further foster safety and training.

In 1986, passive safety tests at EBR-II, the successor to EBR-I, proved that advanced reactors can be designed to be substantially safer. In the experiment, engineers disabled all automatic safety systems and turned the primary pumps off. Without pumps driving the flow, the heated coolant rose, dissipated its heat, and sank again, forming its own convection current. In other words, the reactor shut itself down on its own, demonstrating that its design was inherently safe.

Increased Use and Safety: 1990s to Today

The nuclear industry became even safer after the nuclear incident in Fukushima, Japan, in 2011. The incident occurred after an earthquake and deadly tsunami hit Japan, cutting off off-site power supplies and flooding the backup generators that powered the reactor cooling systems. The reactors overheated and released hydrogen, which is explosive under certain circumstances but is not radioactive.

After the disaster, the United States Nuclear Regulatory Commission (NRC) issued orders to nuclear energy facilities to prevent anything close to Fukushima from happening again, specifically addressing nuclear facilities’ ability to withstand earthquakes and massive flooding. For example, the orders require hardened vents on certain containment designs and the installation of reliable fuel pool level instrumentation at existing reactors.

By the 2000s and 2010s, the phenomenal safety record of the U.S. commercial reactor fleet, combined with growing concern about global climate change caused by carbon emissions from fossil fuels, brought about talk of a nuclear renaissance. Nuclear power does not emit air pollution or greenhouse gases while generating electricity and can run 24/7, complementing other zero-emission resources like solar and wind power.

As of 2021, there were 443 nuclear reactors operating in 32 countries around the world. In 2020, these provided about 10% of the world’s electricity and 20% of the United States’ electricity. According to the Nuclear Energy Institute, the United States avoided about 476 million metric tons of carbon dioxide emissions in 2019 because of the use of nuclear power plants, equivalent to removing 100 million gas-powered cars from the road.
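The car equivalence can be sanity-checked with simple division. The sketch below uses only the two figures quoted above; the variable and function names are illustrative, and the per-car result is an implied average rather than a figure published by the Nuclear Energy Institute.

```python
# Figures quoted in the text (Nuclear Energy Institute, 2019).
AVOIDED_CO2_TONNES = 476e6  # metric tons of CO2 avoided
EQUIVALENT_CARS = 100e6     # gas-powered cars removed from the road


def implied_tonnes_per_car(avoided_tonnes: float, cars: float) -> float:
    """Return the annual CO2 per car implied by the equivalence."""
    return avoided_tonnes / cars


per_car = implied_tonnes_per_car(AVOIDED_CO2_TONNES, EQUIVALENT_CARS)
print(f"Implied emissions: {per_car:.2f} metric tons of CO2 per car per year")
```

The implied value of a little under 5 metric tons per car per year is close to the roughly 4.6 metric tons that the U.S. EPA cites for a typical passenger vehicle, which suggests the two quoted figures are consistent with each other.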

The Future of Nuclear Power

The future of nuclear power is exciting, hopeful, and integral to reaching the worldwide goal of net-zero carbon emissions by 2050. One part of that future is small modular reactors and microreactors, developed by organizations like Westinghouse and NuScale. These modular nuclear reactors are easier and quicker to build than traditional reactors. They are beneficial for remote places that don’t have reliable and affordable power, like parts of Alaska, or in the wake of natural disasters that cause power outages.

Another big innovation is advanced nuclear reactors, which are now being built for a broader range of applications. For example, Oak Ridge National Laboratory (ORNL) is supporting the design of advanced nuclear reactors with a hotter outlet temperature so they can provide heat for chemical industries that currently use natural gas. Since natural gas is a fossil fuel, using nuclear energy instead is much better for the environment.

Additionally, nuclear innovation company TerraPower’s molten chloride fast reactor (MCFR) technology, which is a type of molten salt reactor, also has the potential to operate at higher temperatures than conventional reactors, thus generating electricity more efficiently and without emissions. Its unique design also offers the potential for process heat applications and thermal storage.

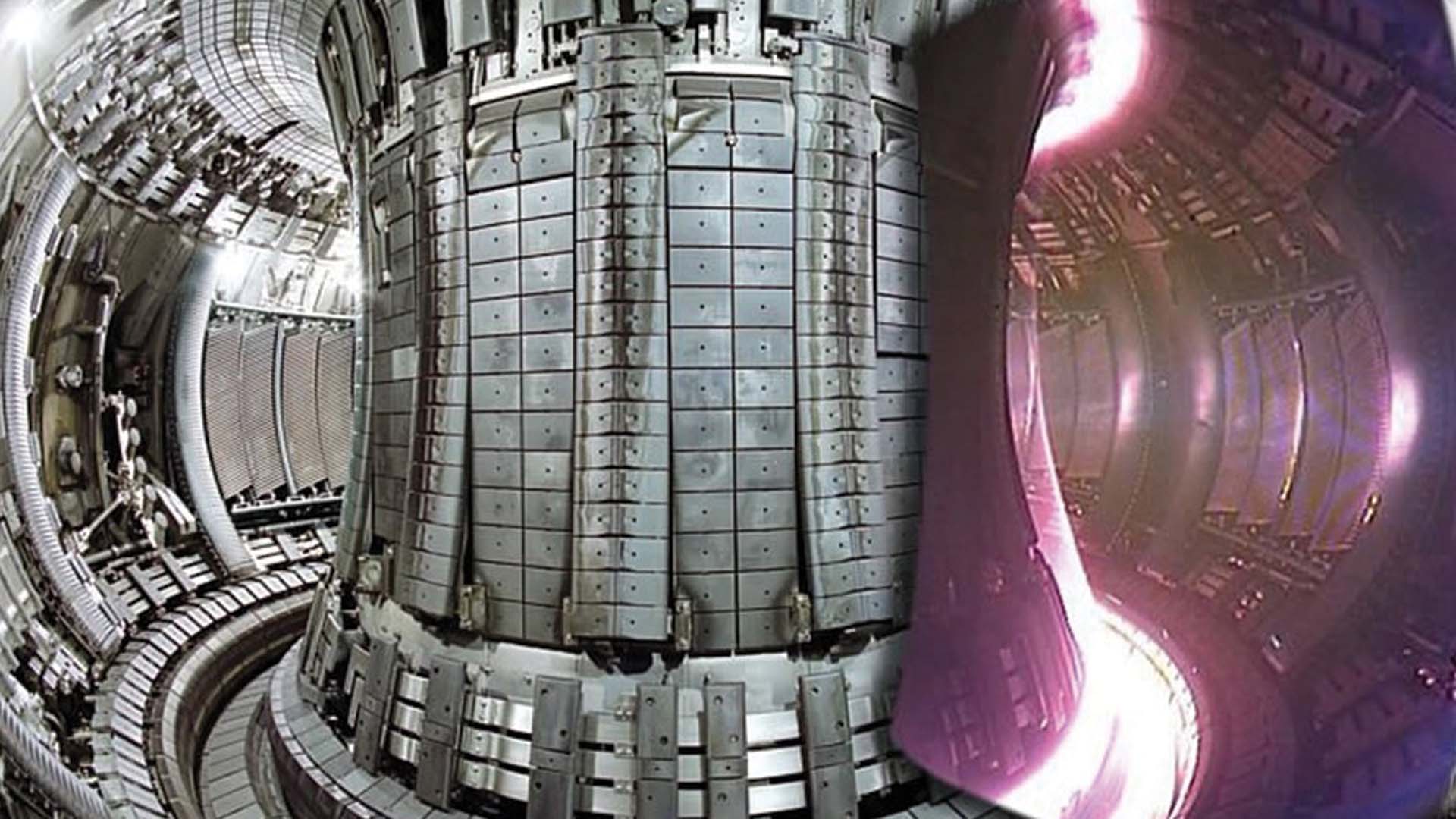

One of the biggest innovations for the future of nuclear power involves using nuclear fusion instead of fission to generate power. Whereas nuclear fission breaks apart heavier atoms and produces radioactive fission products, nuclear fusion occurs when two light nuclei smash together to form a single, heavier nucleus. Fusion could deliver even more power in a safer way with limited long-lived radioactive waste, and fusion reactors are expected to have a much lower risk for nuclear proliferation as compared to current nuclear reactors. Additionally, a fusion reaction is about four million times more energetic than a chemical reaction, such as burning coal, oil, or gas.
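The "four million times" comparison can be roughly reproduced on a per-reaction basis. The sketch below relies on reference values that are not given in the text and are assumptions here: about 17.6 MeV released per deuterium-tritium fusion reaction, and about 393.5 kJ/mol released when carbon burns to carbon dioxide, used as a stand-in for burning coal. It is an order-of-magnitude check, not the derivation behind the quoted figure.

```python
# Assumed reference values (not from the article):
DT_FUSION_ENERGY_EV = 17.6e6          # energy per D-T fusion reaction, in eV
CARBON_COMBUSTION_KJ_PER_MOL = 393.5  # C + O2 -> CO2, heat released per mole
KJ_PER_MOL_PER_EV = 96.485            # 1 eV per particle expressed in kJ/mol


def combustion_energy_ev(kj_per_mol: float) -> float:
    """Convert a molar reaction energy to eV per single reaction."""
    return kj_per_mol / KJ_PER_MOL_PER_EV


chemical_ev = combustion_energy_ev(CARBON_COMBUSTION_KJ_PER_MOL)
ratio = DT_FUSION_ENERGY_EV / chemical_ev

print(f"Chemical reaction: {chemical_ev:.2f} eV")
print(f"Fusion / chemical: {ratio:.2e}")
```

Under these assumptions a single chemical reaction releases a few electronvolts while a single fusion reaction releases millions, so the ratio lands in the low millions, the same order as the figure quoted above.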

Using fusion for nuclear power would be a massive scientific breakthrough and a monumental step toward eliminating the need for fossil fuels. But harnessing fusion for nuclear power is no easy task; for fusion to occur in a reactor, you need a temperature of at least 150,000,000 degrees Celsius (about ten times the temperature of the Sun’s core).

Photo Credit: ITER

The world’s largest fusion project, a collaboration between 35 nations, is the ITER Project, which is being assembled now in southern France. The goal is to build the world’s largest tokamak, a magnetic fusion device designed to prove the feasibility of fusion as a large-scale and carbon-free source of energy. Scientists already understand how to heat the plasma; the main challenge is to make the plasma’s heat self-sustaining. By the mid-2020s, the project hopes to integrate the operation of systems at plant scale to demonstrate that they work. By the mid-2030s, the team hopes to reach full deuterium-tritium operation, in which the device runs on its intended fuel, to demonstrate a self-sustaining plasma.
